Paul Tice, 3D-scanning consultant and CEO of ToPa 3D, posted a LinkedIn article last month about current technologies for processing point cloud data. Notably, he takes a little time to cover Euclideon, and that’s where it gets interesting: Tice calls the company’s much-vaunted Unlimited Detail technology “such a revolutionary concept” that he believes the following statement to be true:

“…it should be possible to assign metadata to each point with said data hosted perhaps as [IP addresses] in the IoT. And, if that’s possible, then we at ToPa 3D believe that one day, component and mesh modeling will be antiquated because intelligent models of the future will be composed of pure point cloud data.”

It reads like both a provocation and a statement of vision. So I caught up with Tice via email to unpack this bombshell and get an idea of what the future holds for the humble point cloud.

What He Means by That

First off, Tice says the basic idea is that clever programming should enable us to “do a lot more with point clouds than ever before.”

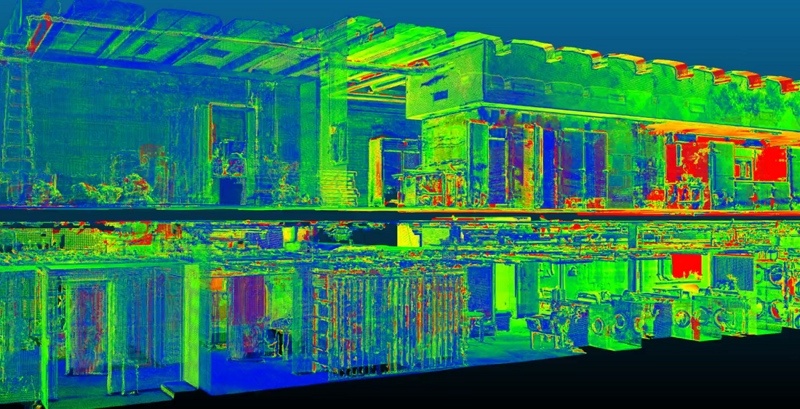

He points out that Euclideon’s method performs a kind of “pre-rendering” of point clouds. This shows that programming can save users the trouble of rendering the point cloud as they view it. It also shows that we can program “particles in a point cloud to perform in specific ways.”

Tice’s big idea follows from this: Clever programming should enable us to “attach metadata to each individual point” in the cloud.

The Internet Protocol defines the system of unique addresses that we use to locate devices on the internet (better known as IP addresses). Tice points to the protocol’s sixth version, IPv6, which widens each address from 32 bits to 128 bits. That vastly expands the number of possible addresses, generating enough unique device identifiers for every grain of sand: roughly 670 quadrillion addresses per square millimeter of the Earth’s surface.
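The per-square-millimeter figure can be sanity-checked with a few lines of arithmetic. This is a rough sketch, not Tice’s own calculation: it assumes the Earth’s total surface area is about 510 million square kilometers, and the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the IPv6 address density figure.
IPV6_BITS = 128                    # an IPv6 address is 128 bits wide
EARTH_SURFACE_KM2 = 510_000_000    # assumption: approx. total surface area of the Earth
MM2_PER_KM2 = 10**12               # (10^6 mm per km) squared

total_addresses = 2**IPV6_BITS
earth_surface_mm2 = EARTH_SURFACE_KM2 * MM2_PER_KM2
addresses_per_mm2 = total_addresses // earth_surface_mm2

print(f"{total_addresses:.2e} total addresses")
print(f"{addresses_per_mm2:.2e} addresses per square millimeter")
```

Under that assumption the result lands in the high hundreds of quadrillions per square millimeter, consistent with the rounded figure quoted above.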

Tice says this means we could easily assign each point its own address, allowing us to treat it as a device on the Internet of Things and as a unique internet location for storing information.

This raises a lot of big possibilities. “What if each and every point knew where it belonged in 3D space?” he asks. “What if one point was assigned to a wall, another to a doorknob? If each point cloud could have this sort of information, maybe we could manipulate a point cloud just like a vector model.”
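To make the idea concrete, here is a minimal sketch of what “a point that knows where it belongs” might look like in code. Everything in it is hypothetical: the `TaggedPoint` class, the `tag_points` and `select` helpers, and the address prefix (taken from the IPv6 range reserved for documentation) are illustrative assumptions, not part of Euclideon’s or ToPa 3D’s software.

```python
import ipaddress
from dataclasses import dataclass, field

# Hypothetical /64 block set aside for one scan (2001:db8::/32 is the
# IPv6 range reserved for documentation and examples).
SCAN_PREFIX = ipaddress.IPv6Network("2001:db8:0:1::/64")


@dataclass
class TaggedPoint:
    x: float
    y: float
    z: float
    address: ipaddress.IPv6Address          # unique identifier for this point
    metadata: dict = field(default_factory=dict)


def tag_points(coords, labels):
    """Give the i-th point its own address in the prefix plus an element label."""
    base = int(SCAN_PREFIX.network_address)
    points = []
    for i, ((x, y, z), label) in enumerate(zip(coords, labels)):
        addr = ipaddress.IPv6Address(base + i + 1)  # skip the all-zeros address
        points.append(TaggedPoint(x, y, z, addr, {"element": label}))
    return points


def select(points, element):
    """Return every point whose metadata assigns it to the given element."""
    return [p for p in points if p.metadata.get("element") == element]


coords = [(0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.9, 0.1, 1.0)]
labels = ["wall", "wall", "doorknob"]  # assumed to come from a classifier
cloud = tag_points(coords, labels)
doorknob = select(cloud, "doorknob")
print(doorknob[0].address, doorknob[0].metadata)
```

Selecting all points tagged “wall” or “doorknob” then becomes a simple metadata query, which is the kind of vector-model-style manipulation Tice describes. The hard part, producing the labels automatically, is assumed away here.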

In other words, Tice argues that programming can turn the much-maligned “dumb” point cloud into a replacement for the “smart” models that we use to index and access our data.

Point Data vs. Vector Data

But, why would we want to attach metadata directly to the point cloud?

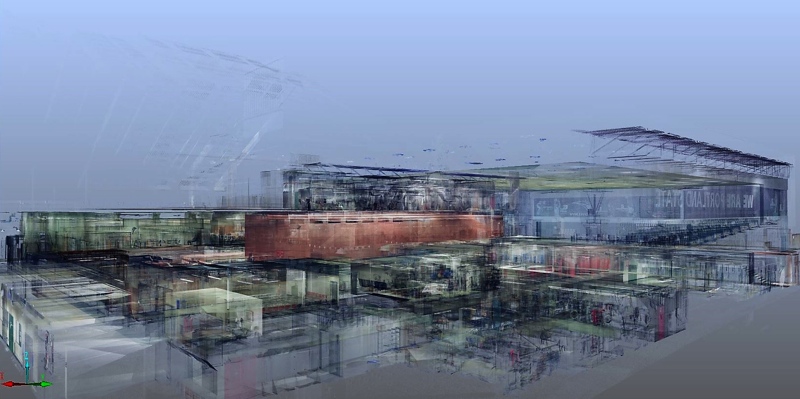

We usually store metadata (like BIM information) in meshes or components we define using auto-extraction software. These are otherwise known as vector models. The problem with vector models, according to Tice, is that we have no efficient way of rendering them. “A simple example is a detailed Revit model,” he says. “These are difficult to navigate in 3D space.”

Point clouds are much faster to render, especially if we “bake” them like Euclideon does. This holds true despite the point clouds having “exponentially more visual detail.” If we use the point cloud as a means of storing our metadata, Tice argues, that would be a lot easier to navigate and much more efficient to manage.

It would also allow for more precise storage of information. “If we have point clouds that are precisely classified with unique identifiers, we would have greater precision with the metadata as it relates to the acquired geometry. With component models, for example, users tag polygons or components with metadata—but there is a limitation on how many polygons users can easily navigate at one time with current BIM platforms.”

In Tice’s vision, the point cloud itself becomes a smart model. This would mean that the processes we use to make models from point clouds would be “no longer relevant.” To use the words from Tice’s original statement, “intelligent models of the future will be composed of pure point cloud data.”

Scan and Deliver

To understand the value this would offer, imagine a construction project where you have installed sensors to capture a full, real-time scan of the whole site. If each point in a scan holds a wealth of relevant metadata, that scan is already a smart model. If you capture multiple scans, you’re essentially creating an archive where each entry is a smart model for that point in time.

Why Aren’t We Doing it Yet?

The biggest challenge is a fairly obvious one, and a tall order: We need to figure out how to assign the metadata to millions of points simultaneously.

Tice suggests the answer is digging deeper into the data that the point cloud already offers, and finding creative ways to exploit it with programming. “I think that, with anything, it’s always good to start small,” he explains. “Working on better classification methods with a goal of making this an autonomous process is a start for testing out this concept for big data retrieval. We will need intelligent classification and grouping.”

Though there are details left to be worked out, and future challenges still unknown, the need is clear to Tice. “We know that polygons are computationally cumbersome with large numbers,” he says. “As our data sets become large for applications such as 3D city modeling, we may run up against severe limitations in the navigation and rapid access to the data we need.”

In the world of the large-scale smart model, the “dumb” point cloud might be our only hope—we just have to get to work and make it smarter.

Be sure to see Paul Tice presenting at SPAR 3D 2017 in Houston, Texas, from April 3-5. We have an exciting program covering all tools for 3D capture, processing, and delivery.