Penn State University set out to create a virtual model of its campuses. This “Virtual Penn State” would act as a single interface into the numerous data sources the school relies on for day-to-day and long-term operations. It would ensure that workers in the field have access to all the asset data they need to do their jobs effectively.

That goal was common enough, but the obstacles weren’t. Penn State owns and maintains around 2,000 different buildings and structures covering a few million square feet of space. These assets are spread over 21 campuses, the largest of which fills 79 acres and serves 40,000 students at any given time.

How could Penn State integrate information about all these assets into a single system?

Further Challenges

Four years ago, Penn State started working on its digital model by gathering BIM data for each new building, including CAD design models, construction models, and even an asset list. But the school quickly realized that BIM data sets wouldn’t be enough for its employees, because they contained no information on any structure more than four years old. At Penn State, this gap was crucial: a full 65% of its buildings were built more than 25 years ago.

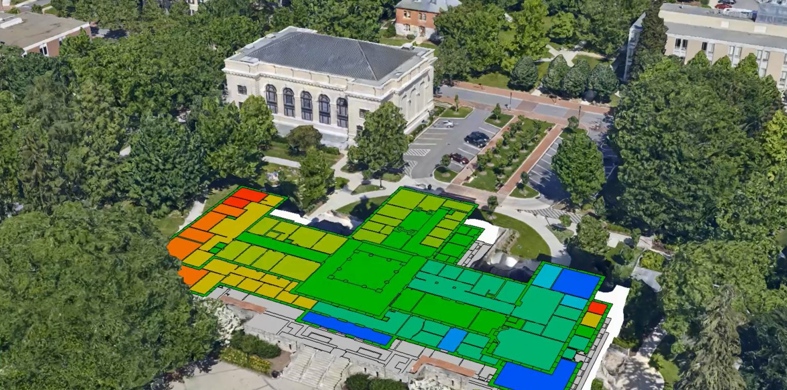

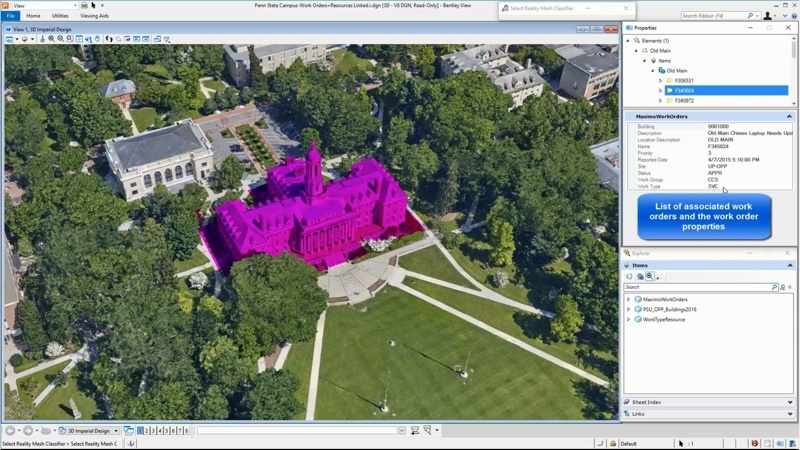

Penn State used 3D reality meshes as an interface for asset data, right down to information about individual rooms.

Penn State knew that even adding GIS data—which includes utilities, trees, buildings, streets, and more—wouldn’t be enough. The digital model would still lack the crucial information stored in the school’s custom enterprise data systems. Penn State had to integrate these systems into the final model, since they were the trusted source for asset information about finances, space management, maintenance, commercial maintenance management, project management, and more.

In other words, Penn State’s digital model project faced a serious data-integration problem. The school had a high volume of disparate information to index. Worse, the information resided in a number of different systems and was saved in different formats.

The problem was extremely complex, but the answer was surprisingly simple: Tie it all into a 3D reality mesh.

How Penn State Did It

Penn State started with its largest campus, University Park. A team flew a Cessna at 1,000 feet and took 2,400 aerial photographs. Next, they used Bentley’s ContextCapture photogrammetry software to process the imagery and generate a 3D reality mesh.

To tie their different data sets to the mesh, Penn State leveraged Bentley’s i-model Transformer technology. They used the software to query their BIM and custom information systems. Next, they integrated the results with GIS information, which enabled them to connect the data to the 3D reality mesh.
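The core of this integration step is joining records from separate systems on a shared key. The following minimal Python sketch illustrates the idea, assuming every system can export records tagged with a common building identifier. It is not Bentley’s i-model Transformer or its API; the field names (`bldg_id`, `wo`, `rooms`), the sample data, and the `integrate` function are all hypothetical. The GIS footprint acts as the hub, and records from each other system attach to it by key.

```python
from collections import defaultdict

# Hypothetical sample records. In practice these would come from queries
# against BIM, GIS, and the enterprise systems; the only assumption here is
# that every record carries a shared building identifier, "bldg_id".
gis_footprints = [
    {"bldg_id": "B001", "name": "Building A"},
    {"bldg_id": "B002", "name": "Auditorium"},
]
work_orders = [
    {"bldg_id": "B002", "wo": "WO-17", "status": "open"},
    {"bldg_id": "B002", "wo": "WO-18", "status": "closed"},
    {"bldg_id": "B999", "wo": "WO-19", "status": "open"},  # no matching footprint
]
space_records = [
    {"bldg_id": "B001", "rooms": 120},
]


def integrate(footprints, *sources):
    """Attach records from each (source_name, records) pair to the GIS footprint
    with the same bldg_id. Returns the merged index plus any records that could
    not be matched, so data-quality gaps stay visible instead of being dropped."""
    index = {f["bldg_id"]: {**f, "records": defaultdict(list)} for f in footprints}
    unmatched = []
    for source_name, records in sources:
        for rec in records:
            entry = index.get(rec["bldg_id"])
            if entry is None:
                unmatched.append((source_name, rec))
            else:
                entry["records"][source_name].append(rec)
    return index, unmatched


integrated, unmatched = integrate(
    gis_footprints, ("work_orders", work_orders), ("space", space_records)
)
print(len(integrated["B002"]["records"]["work_orders"]))  # 2
print(unmatched)
```

Reporting unmatched records, rather than silently discarding them, is one reasonable design choice when the sources were never designed to share identifiers.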

The result is a rich visual interface that integrates Penn State’s many disparate data sets—and makes the information easy to access.

Select a building and the interface shows associated work orders.

Here’s How the Interface Works

A worker in the field selects a building on the 3D reality mesh. Now she can simply pull up whatever information she wants for that building, like a CAD design model or some details from the legacy facility information system. Another worker clicks on the auditorium. He can pull up a work order to see which electrician has been assigned, who will oversee the work, the status of the order, what documents are attached to it, and so on.

This illustrates an important benefit of the 3D reality mesh. It not only offers a platform for integrating all these separate information systems into a single interface, but it does so in a very intuitive way. When a user wants information about a vent, she doesn’t have to know its unique identifier, such as its tracking number. She can simply find the vent on the digital campus model, click on it, and get all the information she needs in one place.
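The vent example boils down to a lookup chain: clicked mesh feature, then asset identifier, then records. A minimal Python sketch follows, assuming the viewer reports an ID for the clicked feature and that the integration step produced a table linking feature IDs to asset tracking numbers. The IDs, record fields, and `on_select` handler are hypothetical and not part of any Bentley product API.

```python
# Hypothetical lookup built during integration: the viewer reports the ID of
# the mesh feature that was clicked, and this table maps it to the asset's
# tracking number, so the user never has to know or type that number.
feature_to_asset = {"mesh-feature-8841": "VENT-00312"}

# Hypothetical per-asset records gathered from the separate systems.
asset_records = {
    "VENT-00312": {
        "specs": {"type": "supply vent", "room": "214"},
        "work_orders": [{"wo": "WO-51", "status": "open"}],
    },
}


def on_select(feature_id):
    """Return everything known about the asset behind a clicked mesh feature,
    or None if the feature isn't linked to any asset."""
    asset_id = feature_to_asset.get(feature_id)
    if asset_id is None:
        return None
    return {"asset_id": asset_id, **asset_records.get(asset_id, {})}


print(on_select("mesh-feature-8841"))
print(on_select("mesh-feature-0000"))  # None: unlinked feature
```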

Unexpected Benefits

As impressive as Penn State’s progress is, the school is still finding new ways to use this 3D reality mesh interface.

For instance, it has developed a system that allows more complex queries. Using Bentley’s MicroStation, a worker or administrator can pull all the work orders that are overdue, and then have their locations highlighted on the 3D reality mesh.
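As an illustration of the kind of query described above (not MicroStation’s own query facility), the following Python sketch filters hypothetical work-order records for those that are not closed and are past their due date, then groups them by building so a viewer could highlight each one. The field names, status values, and sample data are assumptions.

```python
from datetime import date

# Hypothetical work-order records; "status" and "due" are assumed field names.
work_orders = [
    {"wo": "WO-17", "bldg_id": "B002", "status": "open", "due": date(2024, 3, 1)},
    {"wo": "WO-18", "bldg_id": "B002", "status": "closed", "due": date(2024, 2, 1)},
    {"wo": "WO-20", "bldg_id": "B001", "status": "open", "due": date(2024, 6, 1)},
    {"wo": "WO-21", "bldg_id": "B003", "status": "in progress", "due": date(2024, 1, 15)},
]


def overdue_by_building(orders, today):
    """Group work orders that are not closed and past their due date by building.
    The keys of the result are the buildings to highlight on the mesh."""
    result = {}
    for o in orders:
        if o["status"] != "closed" and o["due"] < today:
            result.setdefault(o["bldg_id"], []).append(o["wo"])
    return result


print(overdue_by_building(work_orders, today=date(2024, 4, 1)))
# {'B002': ['WO-17'], 'B003': ['WO-21']}
```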

Select search criteria and the interface shows all matching buildings.

The school has also started converting the floor plans for its buildings into a GIS layer and tying that layer into the model. This means the reality-mesh interface can get more granular and offer access to data about each individual room. Workers can now see how large a room is, who is using it, who has used it in the past, and more.
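Once floor plans live in a GIS layer, each room is a polygon, so two standard geometric operations cover much of what this paragraph describes: a point-in-polygon test (here, ray casting) identifies the room under a selected point, and the shoelace formula gives its floor area. The following Python sketch assumes rooms are stored as simple, non-self-intersecting polygons in local floor coordinates measured in meters; the room data, the `occupant` field, and the `find_room` function are hypothetical, not Penn State’s schema.

```python
# Hypothetical room layer: each room is a simple polygon in local floor
# coordinates (meters), tagged with its floor and a space-management field.
rooms = [
    {"room": "101", "floor": 1, "occupant": "Dept. A",
     "polygon": [(0, 0), (6, 0), (6, 4), (0, 4)]},
    {"room": "102", "floor": 1, "occupant": "Dept. B",
     "polygon": [(6, 0), (11, 0), (11, 4), (6, 4)]},
]


def point_in_polygon(x, y, poly):
    """Ray casting: count how many polygon edges a horizontal ray from (x, y)
    toward +x crosses; an odd count means the point is inside."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y value
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_area(poly):
    """Shoelace formula for the area of a simple polygon."""
    n = len(poly)
    twice_area = sum(
        poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2


def find_room(x, y, floor):
    """Return the room containing (x, y) on the given floor, with its area, or None."""
    for room in rooms:
        if room["floor"] == floor and point_in_polygon(x, y, room["polygon"]):
            return {**room, "area_m2": polygon_area(room["polygon"])}
    return None


hit = find_room(3, 2, floor=1)
print(hit["room"], hit["area_m2"])  # 101 24.0
print(find_room(20, 2, floor=1))  # None
```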

Maybe most impressively, Penn State is considering connecting its building automation systems and security systems to the interface. In the near future, the school’s 3D reality mesh interface could offer access to the current temperature in a room, its average temperature over time, or even show whether a security sensor has been tripped.

Futuristic Possibilities

It’s clear that the 3D reality mesh interface Penn State developed is a lot more than a means for accessing previously recorded data about its assets. It’s also the first step toward a digital twin: a system that provides asset data in real time and lays the groundwork for giving workers up-to-the-second information about buildings, structures, and utilities.

With that kind of data, the school can get proactive. It can start to focus on optimizing its assets so they run as smoothly as possible for as long as possible. As we all know, that’s where the real value is.