At SPAR 3D 2017, Bentley Systems is releasing an extensive update to its reality modeling solutions, adding new options for capturing, processing, visualizing, and consuming 3D data across the full Bentley ecosystem.

In advance of the big release, SPAR 3D sat down with Francois Valois, Bentley’s senior director of software development for reality modeling. He gave us a quick overview of the updates. Each heading below is a new announcement from Bentley.

ContextCapture Adds Aerial and Mobile Lidar Processing

Last November, Bentley updated ContextCapture to process point clouds in addition to photos when generating a 3D reality mesh. Now, a new update enables users to input aerial and mobile lidar—including point clouds generated by indoor mapping devices.

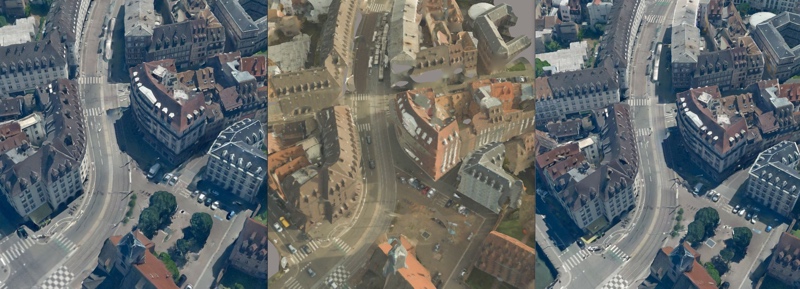

An example of ContextCapture photo planning leveraging existing 3D map data for the aerial data acquisition mission. Image courtesy of Bentley Systems and City of Strasbourg

Valois explains that users can now capture data with a wide variety of devices, each suited for a different purpose. For example, if you want to capture the existing conditions of a train station, you could use aerial lidar for the wider context, drone photography for high-quality color visuals of the structures, and a combination of photos and indoor lidar to capture the indoor conditions.

After capturing, you can process all of your data sets with ContextCapture, which combines them into a single 3D reality mesh.

This file format gives you the best of both photogrammetry and lidar in one place. “We’re able to increase the accuracy of the photos based on the point clouds,” Valois says, “but there are also places where the photo might be a better fit, depending on the constraints. Combining the two, you get a hybrid 3D reality mesh that is very easy to consume and use for different engineering purposes.”

ContextCapture Photo Planning

Since photogrammetry solutions like ContextCapture make processing easy, one of the few remaining challenges is capturing the photos properly to ensure a high-quality result. To make this easier for UAV and ground photogrammetry, Bentley is releasing the ContextCapture photo planning application.

You simply tell the software what you want to model, and it proposes a set of optimal camera positions for getting the best results.

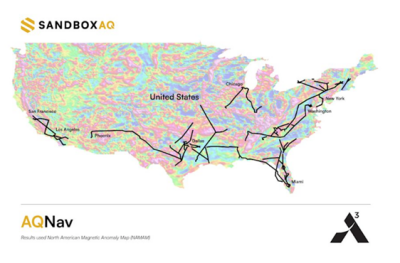

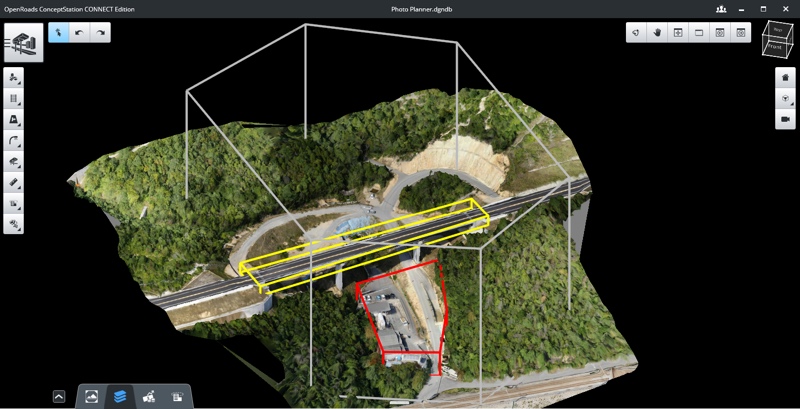

An image in ContextCapture photo planning that shows operation (gray), target (yellow), and forbidden (red) zones. Image courtesy of Bentley Systems

To illustrate, here’s an example workflow. You are using UAVs to model a complex object like a bridge, so you open photo planning and create a quick, approximate 3D model of the bridge. Next, you set up constraints like no-go zones for airports, and set the resolution you’d like to achieve. When you’re done, the software calculates the best camera positions for getting the model you want and pushes this information out to your flight planning application—say, the one you use for your Topcon or DJI drone.

For the final step, a field worker flies the drone and snaps the pictures, modifying the flight plan as necessary to adapt to unforeseen conditions in the field.

ContextCapture photo planning illustrating computed shots for optimal proposed path-to-flight planning applications. Image courtesy of Bentley Systems

“At the end of the day, you’re going to get a reality mesh that’s properly reconstructed, and at the proper resolution,” says Valois. “That’s key, because the resolution is a function of the camera, the distance, and so on, and some of these settings are fairly complex for most human beings to figure out. So, we built the software to do that for our users.”
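To give a sense of the relationship Valois describes, here is a minimal sketch of the standard pinhole-camera formula for ground sampling distance (GSD), the real-world size covered by one pixel. This is not Bentley's planning algorithm, which the company has not detailed here; the function names and the camera values in the usage note are illustrative assumptions.

```python
def ground_sampling_distance(sensor_width_m, focal_length_m, image_width_px, distance_m):
    """Return the size of one pixel on the subject, in meters per pixel.

    Standard pinhole-camera approximation (an assumption, not Bentley's method):
    GSD = (sensor width * distance) / (focal length * image width in pixels).
    """
    return (sensor_width_m * distance_m) / (focal_length_m * image_width_px)


def distance_for_gsd(target_gsd_m, sensor_width_m, focal_length_m, image_width_px):
    """Invert the formula: how far from the subject can the camera be
    while still achieving the target GSD?"""
    return target_gsd_m * focal_length_m * image_width_px / sensor_width_m


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide images.
gsd_at_50_m = ground_sampling_distance(0.0132, 0.0088, 5472, 50.0)
max_distance_for_1_cm = distance_for_gsd(0.01, 0.0132, 0.0088, 5472)
```

With these assumed values, shooting from 50 meters gives roughly 1.4 cm per pixel, and a 1 cm target means staying within about 36.5 meters of the subject. A planning tool has to balance this kind of constraint against overlap, obstacles, and no-go zones at every camera position.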

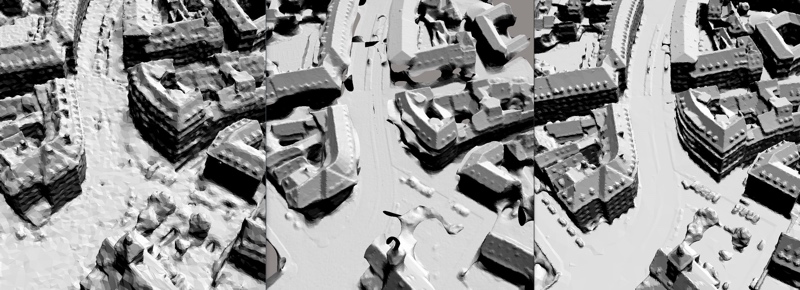

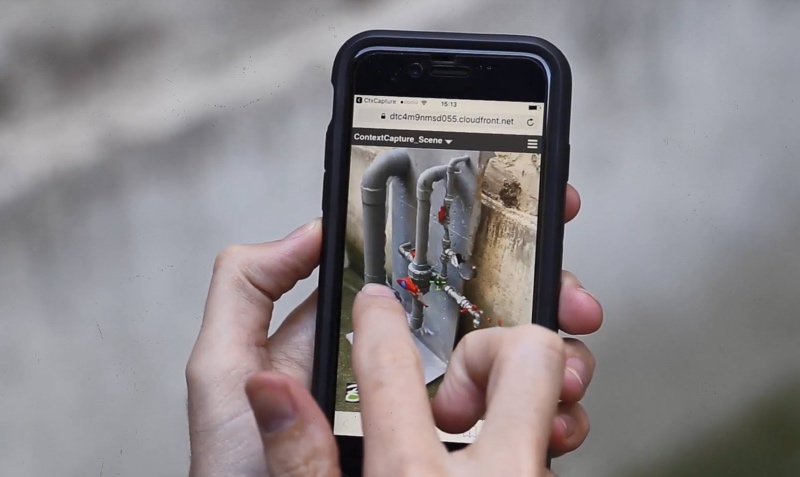

An image of an accurate 3D engineering-ready reality mesh using the ContextCapture mobile application. Image courtesy of Bentley Systems

ContextCapture Mobile

If you want to capture smaller objects, you’ll be interested in Bentley’s new ContextCapture mobile photogrammetry app.

I tested the app in a pre-release version at Bentley’s Year in Infrastructure 2016 Conference, and it makes the documentation of as-built conditions very simple: Point a mobile device at an object, and snap a bunch of photos from different positions. In a minute or two you receive an email with a link to a detailed reality mesh that you can download, share, or store online for later access.

Valois tells me Bentley has since added a function that uses the phone GPS to scale the model to the proper size. The developers have also added a workflow for increasing the accuracy of the model scaling, or even scaling indoors where GPS reception is unavailable.

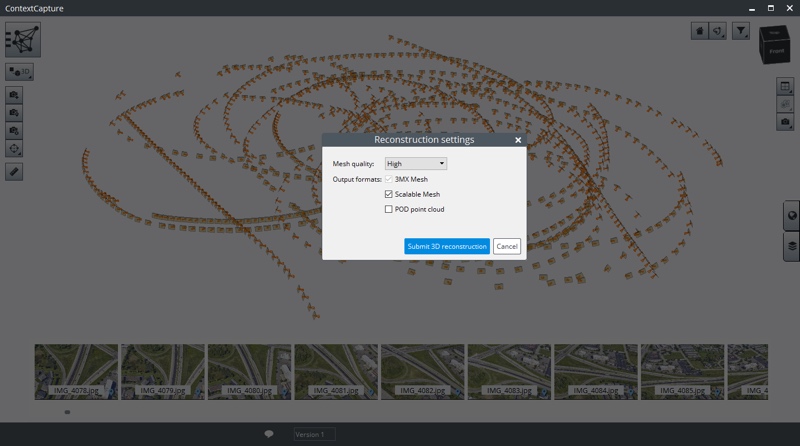

ContextCapture Cloud

As you know, a mobile device doesn’t have the computing power to handle complex photogrammetric reconstruction. This is why Bentley’s mobile app uses a new ContextCapture cloud service for processing.

But the cloud processing isn’t limited to mobile users. Bentley is also releasing a simple console application to drive the service called ContextCapture cloud processing service. This app offers an array of processing options for smaller and mid-sized projects, including reality meshes, orthophotos, digital surface models, and point clouds. Valois says, “It’s ideal for users who perform photogrammetry with handheld devices or UAVs, but have no access to an expensive server farm for processing.”

Submitting a reconstruction for processing using ContextCapture cloud processing service. Image courtesy of Bentley Systems

If you have a small project, you can pay to process it with a single engine. If you have a medium-sized project and want to process your data faster, you can pay to use more engines and cut down the total time.

Valois tells SPAR 3D this should be a welcome update for those with mission-critical applications. He gives the example of a contractor at a construction site who needs a report done by 4:30 p.m. the same day. “He cannot wait on photogrammetry processing to complete it in 10 hours, so the cost is less important than time. That’s why we added the scalability—to be more elastic.”
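The trade-off between engines and time can be sketched with some back-of-the-envelope arithmetic. The snippet below assumes idealized linear scaling, where a job that takes 10 hours on one engine takes 5 hours on two; real processing will not split this cleanly, and Bentley has not published scaling figures here. The function names are hypothetical.

```python
import math


def engines_needed(total_engine_hours, hours_available):
    """Smallest number of engines that finishes the job in time,
    assuming the work divides evenly across engines (an idealization)."""
    if hours_available <= 0:
        raise ValueError("hours_available must be positive")
    return math.ceil(total_engine_hours / hours_available)


def wall_clock_hours(total_engine_hours, engines):
    """Elapsed time for the job under the same linear-scaling assumption."""
    if engines < 1:
        raise ValueError("engines must be at least 1")
    return total_engine_hours / engines


# A job that would take 10 hours on one engine, with 3 hours until the deadline.
needed = engines_needed(10, 3)          # 4 engines
elapsed = wall_clock_hours(10, needed)  # 2.5 hours
```

Under this assumption, the contractor in Valois's example would need four engines to turn a 10-hour job around in three hours, finishing in two and a half.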

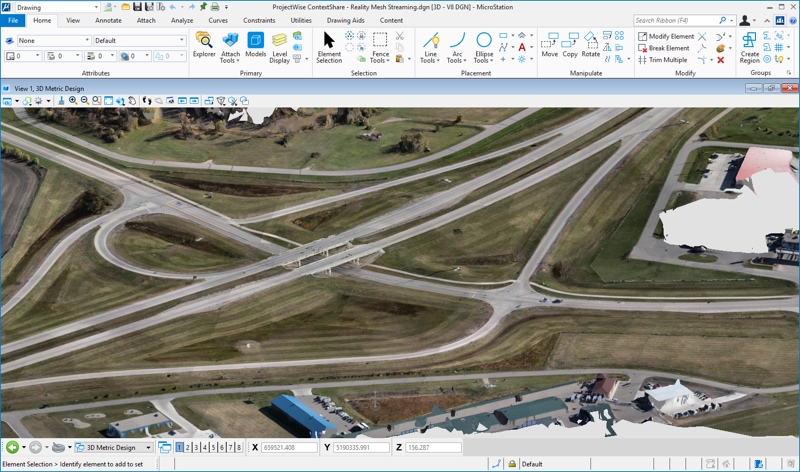

ProjectWise ContextShare

Capturing and processing 3D reality data has become much easier in recent years, but sharing large reality data sets among project stakeholders remains a big challenge for larger organizations. Bentley has a new solution to this problem called ProjectWise ContextShare cloud service.

This cloud-based service hosts all the reality data sets related to your project (no matter what tool you captured them with) and makes them easy to use in other Bentley applications. It’s compatible with MicroStation, OpenRoads and an ever-growing number of CONNECT Edition applications in the Bentley ecosystem.

Share and consume engineering-ready reality data within Bentley software solutions using ProjectWise ContextShare cloud service. Image courtesy of Bentley Systems

ProjectWise ContextShare’s greatest benefit is that it enables users to securely manage increasingly large data sets without investing in expensive IT infrastructure.

Since data security is a dominant concern, Bentley supplies a web interface that acts as an administrative center for your data. There, you can view your reality data sets and set permissions to enable secure access for other users. If you’re an owner-operator, you can make certain data sets available to your consultants, and only that data. Consultants can use the service to make the data available throughout their organizations.

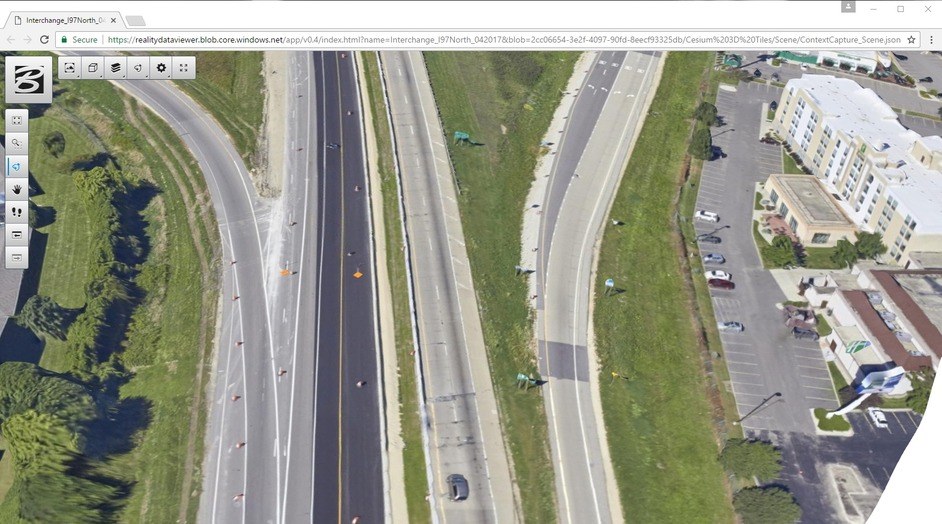

ProjectWise ContextShare offers users a number of ways to access reality data. You can use any device, from on-premises desktop workstations to mobile devices connected to the cloud. Simply search for data by project or geographic location, then download it to work with it locally, or stream the data set if it’s too big to transfer.

Share and consume engineering-ready reality data using the ContextCapture web application within ProjectWise ContextShare cloud service. Image courtesy of Bentley Systems

Next-Generation 3D Reality Meshes

“When you’re working with these reality meshes,” Valois says, “you’ll find that the format has become much easier to consume.”

The new reality meshes are natively “engineering ready,” meaning that users can pull one up in 3D at full resolution and place roads, calculate quantities, or perform other engineering work right on top of it, without data conversion. Bentley’s reality meshes are also “visualization ready.”

“Before, you had to decide whether to do engineering or visualization,” Valois says. “Now you can do both. So, that means Bentley LumenRT can take these next-generation reality meshes at full resolution and put them bit by bit into the GPU. With the next-generation reality mesh, you can use the full resolution model in the real-time visualization engine.”

If you’re working on a large engineering project, you can use a single reality mesh file to preview your project in its reality context, design your project against real-world conditions, and then visualize it for presentations. The next-generation reality mesh enables all of these use cases.

For more details about Bentley’s reality modeling portfolio, and the company’s latest advancements, visit the company in booth #201.