Earlier today, Apple announced that its newest iPad Pro tablets would go on sale with a suite of new features and tools. Rather than sticking to depth-sensing cameras, Apple has incorporated a lidar sensor on board the iPad itself – a first for Apple.

The two new iPad Pro models that have a lidar scanner onboard are the 11-inch and 12.9-inch models. The scanner is designed to enhance 3D photography and to support developers who are using ARKit to build augmented reality (AR) apps.

Rather than using a third-party lidar, Apple seems to have developed its own proprietary version, which it simply refers to as the “LiDAR Scanner.” It has a reported range of 5 meters and “operates at the photon level at nanosecond speeds,” which, one can assume, means it is some flavor of the small lidar sensors that we’ve been hearing a lot about lately. The benefit of this short range might be to fill in smaller, more detailed areas that larger-scale scans have difficulty with.
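To put the “nanosecond speeds” claim in perspective, here is a rough back-of-the-envelope sketch. It assumes a simple direct time-of-flight model, where distance is half the round-trip travel time of a light pulse multiplied by the speed of light. Apple has not published the sensor's internals in this announcement, so the function names below are purely illustrative and not part of any Apple API.

```python
# Illustrative only: assumes a plain time-of-flight model, d = c * t / 2.
# The function names are hypothetical and not taken from any Apple API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by SI definition


def round_trip_time_ns(distance_m: float) -> float:
    """Time for a light pulse to reach a target and come back, in nanoseconds."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S * 1e9


def distance_from_round_trip_m(round_trip_ns: float) -> float:
    """Inverse of the above: target distance in meters from a round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * (round_trip_ns * 1e-9) / 2.0


if __name__ == "__main__":
    # 5.0 m is the reported maximum range of the LiDAR Scanner.
    for d in (0.5, 1.0, 5.0):
        print(f"{d:.1f} m -> {round_trip_time_ns(d):.2f} ns round trip")
```

Under this model, a target at the full 5-meter range returns light in roughly 33 nanoseconds, and closer objects return it in only a few, which is consistent with the timing scale Apple describes.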

Fundamentally, the iPad Pro’s lidar is used to create depth mapping points that, when combined with camera and motion sensor data, can create a “more detailed understanding of a scene,” according to Apple. With the help of the lidar sensor, ARKit apps can get better placement of real-world and augmented reality objects, better motion capture, and occlusion – all of which help an augmented reality experience seem even more realistic. A scene does not need a long “scanning” period before AR objects can be placed, which makes for a more seamless integration of AR elements. Developers can also use the new lidar scanner through the API to push beyond what’s currently possible using only depth cameras.
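Occlusion is the easiest of these benefits to illustrate. The sketch below is a conceptual toy, not ARKit code: it assumes the sensor yields a per-pixel depth map of the real scene and that the renderer knows the depth of the virtual object at each pixel. The function name and the grid representation are hypothetical.

```python
from typing import List, Optional

# Conceptual toy, not ARKit: a virtual pixel is drawn only when it is
# closer to the camera than the real surface measured at that pixel.


def visible_mask(
    real_depth_m: List[List[float]],
    virtual_depth_m: List[List[Optional[float]]],
) -> List[List[bool]]:
    """Return True where the virtual object should be drawn.

    A value of None in virtual_depth_m means the virtual object does not
    cover that pixel at all.
    """
    mask = []
    for real_row, virtual_row in zip(real_depth_m, virtual_depth_m):
        mask.append(
            [v is not None and v < r for r, v in zip(real_row, virtual_row)]
        )
    return mask


if __name__ == "__main__":
    # A real object 1 m away fills the top-left pixels; the wall is at 3 m.
    real = [[1.0, 1.0, 3.0],
            [1.0, 3.0, 3.0]]
    # A virtual object placed 2 m away, covering every pixel.
    virtual = [[2.0, 2.0, 2.0],
               [2.0, 2.0, 2.0]]
    for row in visible_mask(real, virtual):
        print(row)
```

In this toy scene the virtual object is hidden wherever the nearer real object sits in front of it and drawn wherever only the far wall is behind it. The more accurate the real-world depth map, the cleaner that boundary looks.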

The depth sensing from lidar, combined with the dual depth cameras, is pushed through sophisticated computer vision algorithms. In practical terms, that means that, taken together and with the help of software, this can be a very powerful scanning platform. Apple has marketed these advances as focusing on the development of augmented reality (AR) applications and experiences on the iPad, but with ARKit available for others to tweak, the possibility of other scanning abilities is not far away. With both Apple apps and third-party apps able to use this new depth mapping capability, I would not be surprised if we are on the cusp of some major announcements from some of the bigger 3D scanning players about partnerships or new apps to take advantage of the sensor.

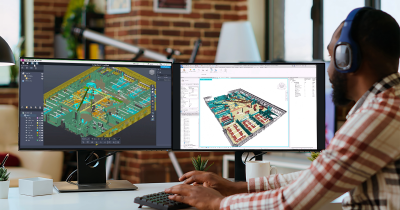

According to Apple, later this year, Shapr3D, a professional CAD system based on Siemens Parasolid technology, will use the LiDAR Scanner to automatically generate an accurate 2D floor plan and 3D model of a room. New designs can then be previewed at real-world scale using AR right in the room you scanned.

With the official integration of lidar on an iPad model, it would not be out of the realm of possibility for lidar to make it to other iOS devices in the future. It is rumored to be a feature of certain iPhone 12 models coming out later this year. If nothing else, lidar has been thrust into the mainstream conversation when it comes to technology, and it will be interesting to see how it is applied beyond Apple’s own apps.