This week in Denver, Colorado, some of the biggest companies in the world are coming together for SIGGRAPH 2024. The annual conference showcases the latest innovations in the world of computer graphics and all things 3D, which as one would expect encompasses a lot of industries. There are the ones that we are interested in, like manufacturing and other enterprise sectors that are utilizing tools like digital twins, along with others you’d expect like gaming and entertainment.

Unsurprisingly given their place in seemingly everything today, NVIDIA is making the biggest impression at this year’s event. CEO Jensen Huang has been front and center for a couple of the biggest sessions, sitting down for a fireside chat with WIRED Senior Writer Lauren Goode, while also taking the stage in a separate session with Meta CEO Mark Zuckerberg. In addition to the marquee speaking sessions from their CEO, NVIDIA also made a series of announcements early on in the week.

These announcements run the gamut and touch many industries, but two in particular are of interest to enterprise users, whether they are looking to get into 3D visualization and simulation for the first time or already use these technologies and want to take them to the next level.

In one announcement, NVIDIA introduced fVDB, which they describe as “a new deep-learning framework for generative AI-ready virtual representations of the real world.” The company also announced advancements to the Universal Scene Description (USD) that they say will “expand the adoption of the universal 3D data interchange framework to robotics, industrial design and engineering, and accelerate developers’ abilities to build highly accurate virtual worlds for the next evolution of AI.”

Starting with fVDB, NVIDIA believes the framework will unlock new possibilities in generative physical AI, which the company defines as what “enables autonomous machines to perceive, understand, and perform complex actions in the real (physical) world.”

fVDB is built on OpenVDB, and translates real-world data collected by photogrammetry, NeRFs, or lidar into massive, AI-ready environments rendered in real time. NVIDIA points to increased scale (4x larger than prior frameworks), speed (3.5x faster), and power (10x more operators than prior frameworks), as well as interoperability, as the key advantages of fVDB. The hope is that this new framework will make large-scale digital twins and powerful simulations easier to build for things like city planning, disaster response, and smart city development.

Along with fVDB, NVIDIA also announced new generative AI capabilities built for OpenUSD. We’ve discussed OpenUSD quite a bit on Geo Week News, including in a webinar held earlier this year with experts from the Alliance for OpenUSD.

In this most recent announcement, NVIDIA, which is among the founding members of the Alliance for OpenUSD, introduced new generative AI models for the 3D data interchange framework, along with NIM microservices designed to accelerate the deployment of those models. In their announcement, the company says that the new offerings include microservices for AI models that can “generate OpenUSD language to answer user queries, generate OpenUSD Python code, apply materials to 3D objects, and understand 3D space and physics to help accelerate digital twin development.”

Right now, the following microservices are available in preview:

USD Code NIM microservice — answers general knowledge OpenUSD questions and automatically generates OpenUSD-Python code based on text prompts that can then be inputted into an OpenUSD viewing app, such as usdview from Pixar, or an NVIDIA Omniverse Kit-based application to visualize the corresponding 3D data.

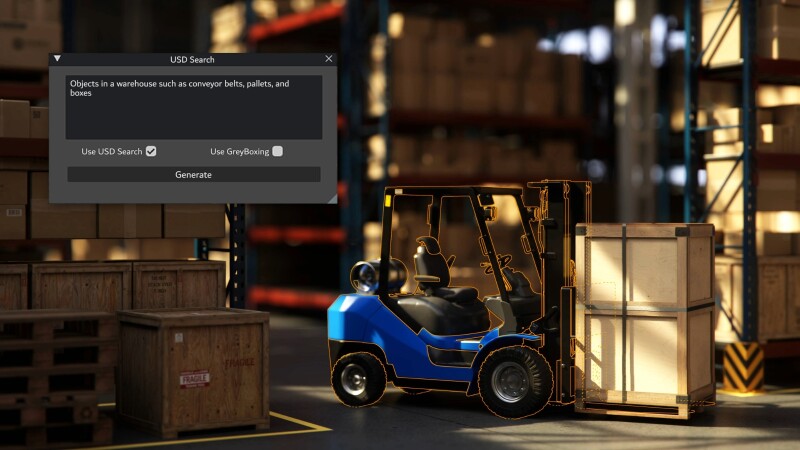

USD Search NIM microservice — enables developers to search through massive libraries of OpenUSD, 3D and image data using natural language or image inputs.

USD Validate NIM microservice — checks the compatibility of uploaded files against OpenUSD release versions and generates a fully RTX-rendered, path-traced image, powered by NVIDIA Omniverse Cloud APIs, or application programming interfaces.
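For readers who have not worked with OpenUSD, it may help to see what a very small scene looks like on disk. The sketch below is not output from the USD Code NIM microservice, and it does not use the OpenUSD Python bindings; it is a dependency-free illustration that writes a minimal text-format (.usda) layer containing one sphere. The function name `make_scene_usda`, the prim names, and the output file name are hypothetical and chosen only for this example.

```python
def make_scene_usda(prim_name: str = "Ball", radius: float = 1.0) -> str:
    """Return the text of a minimal .usda layer with one sphere under /World.

    Hypothetical helper for illustration only; real OpenUSD Python code
    would normally build the stage with the OpenUSD bindings instead of
    assembling the text by hand.
    """
    lines = [
        "#usda 1.0",                      # required header of a text USD layer
        "(",
        '    defaultPrim = "World"',      # prim used when the layer is referenced
        ")",
        "",
        'def Xform "World"',              # transformable root prim
        "{",
        f'    def Sphere "{prim_name}"',  # sphere prim nested under /World
        "    {",
        f"        double radius = {float(radius)}",
        "    }",
        "}",
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Write the layer to disk so it can be opened in a USD viewer.
    with open("scene.usda", "w", encoding="utf-8") as f:
        f.write(make_scene_usda("Ball", 2.0))
```

A file like this is the kind of 3D data that a viewer such as usdview can open; the generated code described above would typically produce an equivalent scene through the OpenUSD Python API rather than by writing text directly.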

Additionally, the following microservices are going to be available “soon”:

USD Layout NIM microservice — enables users to assemble OpenUSD-based scenes from a series of text prompts based on spatial intelligence.

USD SmartMaterial NIM microservice — predicts and applies a realistic material to a computer-aided design object.

fVDB Mesh Generation NIM microservice — generates an OpenUSD-based mesh, rendered by Omniverse Cloud APIs, from point-cloud data.

fVDB Physics Super-Res NIM microservice — performs AI super resolution on a frame or sequence of frames to generate an OpenUSD-based, high-resolution physics simulation.

fVDB NeRF-XL NIM microservice — generates large-scale neural radiance fields in OpenUSD using Omniverse Cloud APIs.

There is a lot to digest in these announcements, but the broad takeaway is that developing physically accurate digital twins, particularly those for simulation purposes, is now more accessible than ever before. In their announcement of the USD-based developments, NVIDIA points to a pair of case studies showing these tools in practice. In one, marketing and communications company WPP used USD-based generative AI content creation for customers like Coca-Cola. They also point to manufacturer Foxconn, which is using NIM microservices and NVIDIA’s Omniverse platform to create a digital twin for a factory currently under development.

“The generative AI boom for heavy industries is here,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA, in a statement. “Until recently, digital worlds have been primarily used by creative industries; now, with the enhancements and accessibility NVIDIA NIM microservices are bringing to OpenUSD, industries of all kinds can build physically based virtual worlds and digital twins to drive innovation while preparing for the next wave of AI: robotics.”