The biggest breakthrough in mapping technology in the last few years is probably artificial intelligence. Web developers can now combine Esri’s basemaps and other web mapping services with AI-capable APIs, including those from Google, Microsoft, and OpenAI. The following is a selection of web apps built by Courtney Yatteau, a developer advocate at Esri, that combine Esri’s web mapping services with AI APIs using HTML, CSS, and JavaScript. A series of videos showing both the finished apps and code examples is available on her YouTube channel.

The first example shows how to create a simple web app with the ArcGIS Maps SDK for JavaScript and use OpenAI to generate facts about locations the user clicks. The finished app displays a web map of a predefined area in the browser. When the user clicks anywhere on the map, the app automatically sends the longitude and latitude of that point to OpenAI, which returns the name of the location and a fact about it, such as a piece of its history. Both are shown in a popup.
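
The click-to-fact flow can be sketched as a pair of helpers: one that turns the clicked coordinates into an OpenAI Chat Completions request, and one that reads the generated text back out. This is a minimal sketch, not the tutorial's code; the model name `gpt-4o-mini`, the prompt wording, and the helper names are assumptions. Only the pure request/response logic runs here; the ArcGIS `view.on("click")` wiring is shown in comments.

```javascript
// Sketch only: model name, prompt wording, and helper names are assumptions.
const OPENAI_URL = "https://api.openai.com/v1/chat/completions";

// Build a Chat Completions request body asking for a place name and one fact.
function buildLocationFactRequest(latitude, longitude, model = "gpt-4o-mini") {
  const lat = latitude.toFixed(4);
  const lon = longitude.toFixed(4);
  return {
    model,
    messages: [
      { role: "system", content: "You are a concise geography assistant." },
      {
        role: "user",
        content:
          `What place is at latitude ${lat}, longitude ${lon}? ` +
          "Reply with its name and one interesting fact, such as a historical detail.",
      },
    ],
  };
}

// fetch() options for the request. In production, keep the key on a server.
function buildFetchInit(apiKey, body) {
  return {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify(body),
  };
}

// Read the generated text from a Chat Completions response (empty string if absent).
function extractReply(response) {
  return response?.choices?.[0]?.message?.content ?? "";
}

// Wiring in the ArcGIS Maps SDK app (not executed here):
// view.on("click", async (event) => {
//   const { latitude, longitude } = event.mapPoint;
//   const body = buildLocationFactRequest(latitude, longitude);
//   const res = await fetch(OPENAI_URL, buildFetchInit(apiKey, body));
//   const text = extractReply(await res.json());
//   // ...show `text` in a popup anchored at event.mapPoint
// });
```

Rounding to four decimal places (roughly 10 m) keeps the prompt short without changing which place the model is asked about.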

A similar example with the same functionality uses Esri Leaflet and the Google Gemini API. Esri Leaflet is a lightweight, open-source Leaflet plug-in for accessing ArcGIS Location and ArcGIS Enterprise services, while Gemini is Google’s family of generative AI models, available both as a chatbot and through the Gemini API.
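
With Leaflet and Gemini, the same flow changes mainly in the request shape: Gemini's REST `generateContent` method takes a `contents` array of `parts`, and its reply sits under `candidates[0].content.parts[0].text`. The sketch below assumes the `gemini-1.5-flash` model and a made-up prompt; neither is taken from the tutorial, and the helper names are hypothetical. The Leaflet click and popup wiring is shown in comments.

```javascript
// Sketch only: model name, prompt, and helper names are assumptions.
const GEMINI_BASE = "https://generativelanguage.googleapis.com/v1beta/models";

// URL for a generateContent call, with the API key as a query parameter.
function buildGeminiUrl(apiKey, model = "gemini-1.5-flash") {
  const url = new URL(`${GEMINI_BASE}/${model}:generateContent`);
  url.searchParams.set("key", apiKey);
  return url.toString();
}

// Request body: a single user turn containing the prompt text.
function buildGeminiBody(lat, lng) {
  const prompt =
    `What place is at latitude ${lat.toFixed(4)}, longitude ${lng.toFixed(4)}? ` +
    "Reply with its name and one interesting fact.";
  return { contents: [{ parts: [{ text: prompt }] }] };
}

// Generated text lives under candidates[0].content.parts[0].text.
function extractGeminiText(response) {
  return response?.candidates?.[0]?.content?.parts?.[0]?.text ?? "";
}

// Wiring with Leaflet (not executed here):
// map.on("click", async (e) => {
//   const res = await fetch(buildGeminiUrl(apiKey), {
//     method: "POST",
//     headers: { "Content-Type": "application/json" },
//     body: JSON.stringify(buildGeminiBody(e.latlng.lat, e.latlng.lng)),
//   });
//   const text = extractGeminiText(await res.json());
//   L.popup().setLatLng(e.latlng).setContent(text).openOn(map);
// });
```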

By combining Esri’s demographic data with OpenAI’s capabilities, Yatteau created an interactive urban planning app. When a user clicks the map, the app retrieves demographic data for that location from an Esri REST API and sends it to OpenAI together with the coordinates and a request for urban planning suggestions based on that data. Grounding the request in local demographics helps OpenAI produce more relevant recommendations.
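
The key step in this app is folding the demographic attributes into the prompt so the model's suggestions are tied to the clicked area. The sketch below assumes the attributes have already been fetched (for example, from the ArcGIS GeoEnrichment service; the source does not name the endpoint) and flattened into a plain object. The field names, the prompt wording, and the `buildPlanningPrompt` helper are hypothetical.

```javascript
// Sketch only: field names and prompt wording are hypothetical; real attribute
// names depend on which Esri REST service supplies the demographics.
function buildPlanningPrompt(latitude, longitude, demographics) {
  // Skip attributes the service returned as null/undefined.
  const facts = Object.entries(demographics)
    .filter(([, value]) => value !== null && value !== undefined)
    .map(([name, value]) => `- ${name}: ${value}`);

  return [
    `Location: latitude ${latitude.toFixed(4)}, longitude ${longitude.toFixed(4)}`,
    "Demographic profile:",
    ...facts,
    "Based on this profile, suggest three urban planning improvements for the area.",
  ].join("\n");
}

// Example: the resulting string would be sent as the user message of an OpenAI chat request.
const examplePrompt = buildPlanningPrompt(34.0522, -118.2437, {
  totalPopulation: 12000,
  medianAge: 34.5,
});
```

Keeping the data as a labeled list, rather than prose, makes it easy to add or drop attributes without rewriting the prompt.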

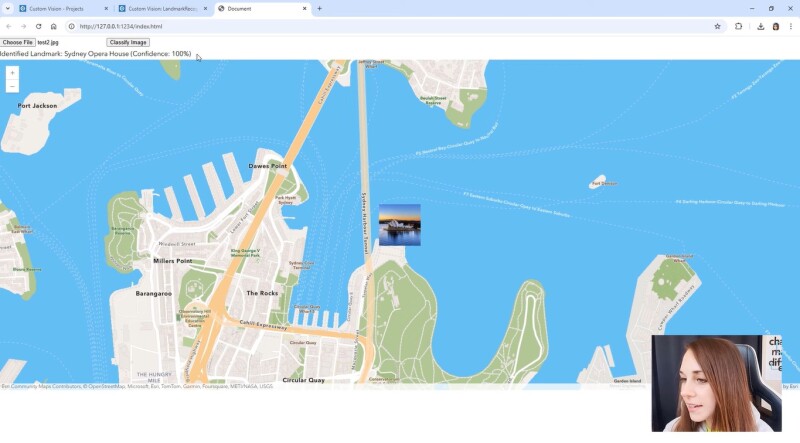

Azure AI Custom Vision is an image recognition service for building, deploying, and improving image identifier models. Yatteau uses it to classify landmark images and map the results with ArcGIS. Besides building an app to display the classification results, this requires setting up Custom Vision: signing up for an account, creating a new image classification project, and uploading training images.
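
Once a Custom Vision iteration is trained and published, the app can send an image to the prediction endpoint as raw bytes. The sketch below builds that request, assuming the v3.0 prediction URL format and the `Prediction-Key` header; the endpoint, project ID, published iteration name, and key are placeholders you would copy from the Custom Vision portal, and `buildClassifyRequest` is a hypothetical helper.

```javascript
// Sketch only: all identifiers are placeholders; verify the URL against the
// prediction URL shown in your Custom Vision project.
function buildClassifyRequest({ endpoint, projectId, publishedName, predictionKey }) {
  const base = endpoint.replace(/\/+$/, ""); // drop any trailing slash
  return {
    url:
      `${base}/customvision/v3.0/Prediction/${projectId}` +
      `/classify/iterations/${encodeURIComponent(publishedName)}/image`,
    headers: {
      "Prediction-Key": predictionKey,
      "Content-Type": "application/octet-stream", // body is the raw image bytes
    },
  };
}

// In the browser (not executed here), `file` could come from an <input type="file">:
// const req = buildClassifyRequest(config);
// const res = await fetch(req.url, { method: "POST", headers: req.headers, body: file });
// const result = await res.json(); // result.predictions: [{ tagName, probability, ... }]
```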

In the example video, images of the Colosseum in Rome, the Taj Mahal, and the Sydney Opera House are uploaded to train the AI model. Custom Vision is used in two stages: first to train the model on these images, and then to classify new landmark images uploaded to the app. If the model recognizes a landmark, the app places it at its real-world location on the map.
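
Placing a recognized landmark on the map amounts to taking the most probable tag from the prediction result and looking up its coordinates. The sketch below assumes a hand-maintained lookup table whose keys match the Custom Vision tag names, plus a hypothetical confidence threshold of 0.8 below which nothing is placed; the coordinates are approximate, and `locateLandmark` is a hypothetical helper.

```javascript
// Hypothetical lookup table: keys must match the tag names used in Custom Vision.
// Coordinates are approximate WGS84 values.
const LANDMARKS = {
  Colosseum: { latitude: 41.8902, longitude: 12.4922 },
  "Taj Mahal": { latitude: 27.1751, longitude: 78.0421 },
  "Sydney Opera House": { latitude: -33.8568, longitude: 151.2153 },
};

// Return { name, probability, latitude, longitude } for the best prediction,
// or null if nothing is confident enough or the tag is not in the table.
function locateLandmark(predictionResponse, minProbability = 0.8) {
  const predictions = predictionResponse?.predictions ?? [];
  if (predictions.length === 0) return null;
  const best = predictions.reduce((a, b) => (b.probability > a.probability ? b : a));
  if (best.probability < minProbability) return null;
  const coords = LANDMARKS[best.tagName];
  return coords ? { name: best.tagName, probability: best.probability, ...coords } : null;
}
```

The returned latitude and longitude could then be used to add a point graphic at the landmark's location. Rejecting low-probability results avoids pinning an unrecognized photo to whichever landmark happened to score highest.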

AI can also analyze elevation data to rate the difficulty of walking paths and display the results in a mapping application. The app suggests a route between two points the user selects on the map, using a travel mode of the user’s choice. It uses the TensorFlow.js machine learning library to estimate a path’s difficulty from its elevation data and slope. The app fetches elevation data for the route from the ArcGIS Elevation service, applies a trained TensorFlow.js model to predict the route’s difficulty, and displays the route on a 2D map along with its elevation details and maximum slope.
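
Before a model can rate a route, the elevation profile has to be reduced to numeric features such as maximum slope and total ascent. The sketch below computes those features, assuming the profile is an array of samples with cumulative horizontal distance and elevation in meters. To keep it runnable without TensorFlow.js, a fixed threshold rule with hypothetical cut-offs stands in for the trained model; it is not the app's classifier.

```javascript
// Sketch only. Assumes samples ordered along the route, each with cumulative
// horizontal distance (m) and elevation (m).
function slopeFeatures(profile) {
  let maxSlope = 0; // steepest segment grade (uphill or downhill), in percent
  let totalAscent = 0; // sum of uphill elevation gains, in meters
  for (let i = 1; i < profile.length; i++) {
    const run = profile[i].distance - profile[i - 1].distance;
    const rise = profile[i].elevation - profile[i - 1].elevation;
    if (run <= 0) continue; // ignore duplicate or out-of-order samples
    maxSlope = Math.max(maxSlope, (Math.abs(rise) * 100) / run);
    if (rise > 0) totalAscent += rise;
  }
  const length =
    profile.length > 1 ? profile[profile.length - 1].distance - profile[0].distance : 0;
  return { maxSlope, totalAscent, length };
}

// Stand-in for the trained TensorFlow.js model: fixed, hypothetical thresholds.
function classifyDifficulty({ maxSlope, totalAscent }) {
  if (maxSlope >= 15 || totalAscent >= 500) return "hard";
  if (maxSlope >= 8 || totalAscent >= 200) return "moderate";
  return "easy";
}

// With TensorFlow.js, the same features could instead be fed to a loaded model,
// e.g. model.predict(tf.tensor2d([[f.maxSlope, f.totalAscent, f.length]])).
```

A single steep segment is enough to raise the rating here, which mirrors the app's choice to report the maximum slope alongside the overall elevation profile.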