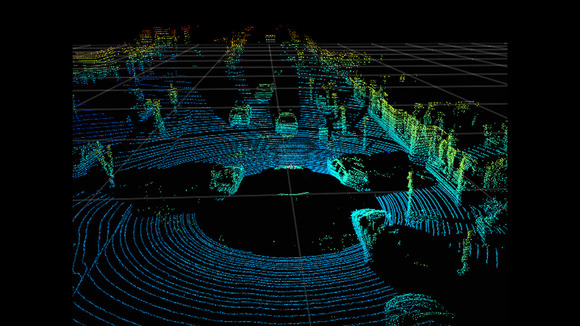

When I write about 3D capture, I tend to think of optical sensors. When I write about visual data in the fourth dimension, time, I tend to think of scheduling. These are biases I developed over years of focusing on lidar and photogrammetry, and on the way users apply these technologies to static objects over the time scale of a large, slow-moving project. Now, in 2018, with the autonomous vehicle market segment having grown to match and even overtake this market in size, I’ve had to start thinking about 3D a bit differently.

The incredible demand for autonomous vehicles has driven the development of different kinds of sensors, because, as our long-suffering blogger Will Tompkinson is fond of arguing, you can’t just take a sensing technology from one application and apply it to another without a second thought.

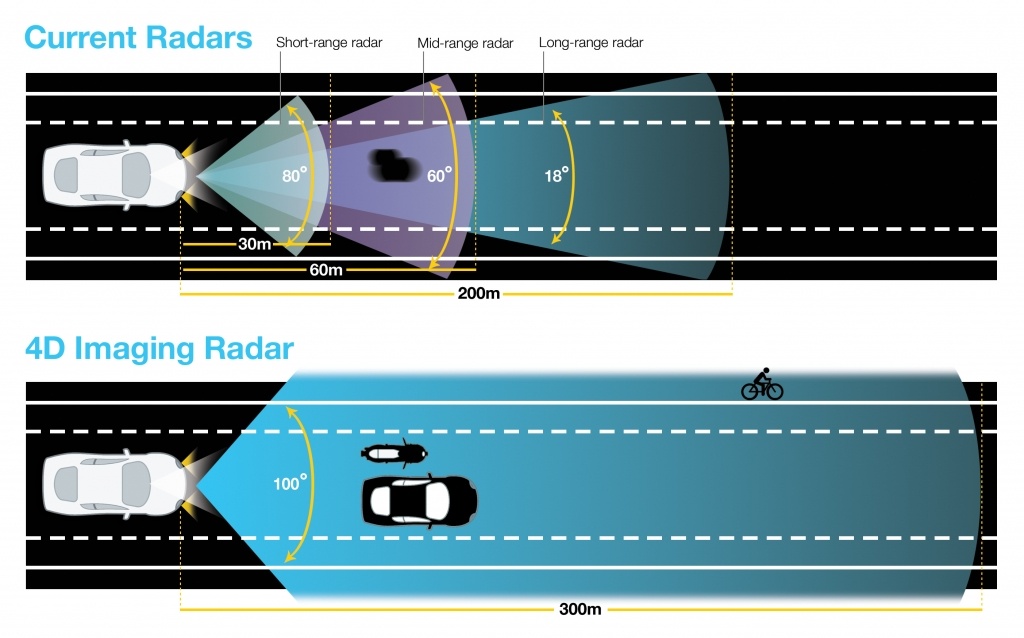

One such novel sensor is the 4D imaging radar, which uses radio-wave echolocation (like a dolphin, a bat, or some humans, though they use sound) and the principle of time-of-flight measurement to capture a space in 3D. It is also designed to image at the time scale of a fast-moving automobile or a zooming drone.
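For readers who want the time-of-flight principle in concrete terms, here is a minimal sketch. It is not taken from any vendor's implementation: the function names and the 100-meter example distance are assumptions chosen for illustration. The only physics involved is that a radio pulse travels out and back at the speed of light, so the range is half the round-trip distance.

```python
# Illustrative sketch of time-of-flight ranging (names are hypothetical).
C = 299_792_458.0  # speed of light in m/s


def range_from_echo(round_trip_s: float) -> float:
    """Distance to a target given the round-trip time of its echo."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the divide by 2.
    return C * round_trip_s / 2.0


def echo_delay_for_range(range_m: float) -> float:
    """Round-trip time a sensor would measure for a target at range_m."""
    return 2.0 * range_m / C


if __name__ == "__main__":
    delay = echo_delay_for_range(100.0)  # assumed example distance
    print(f"Echo delay for 100 m: {delay * 1e6:.3f} microseconds")
    print(f"Recovered range: {range_from_echo(delay):.1f} m")
```

The delay for a target 100 meters away works out to about two-thirds of a microsecond, which gives a sense of how fast the timing electronics have to be.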

Radar v. lidar

A recent article in Sensors Online lays out a pretty convincing argument that these 4D sensors are absolutely crucial to achieving level 4 and level 5 automation for passenger vehicles. In the article, Kobi Marenko, CEO and co-founder of Arbe Robotics, explains why optical sensors can’t get us to levels 4 and 5 without the help of 4D imaging radar.

Before we go any further, here’s a quick primer on levels of automation:

- Level 0 describes no automation, like the car you’re probably driving now: You control everything yourself, and the car does nothing for you.

- Levels 1-3 add various degrees of automation, with Tesla’s Autopilot falling somewhere between levels 2 and 3, since it can steer, accelerate, brake, and sometimes manage the driving itself (though it’s not supposed to).

- Level 4 means the car can operate without driver control, but only in certain conditions. Think of an autonomous vehicle that runs on a college campus, for instance.

- Level 5 autonomy means the car can drive itself, completely and fully—kick back and take a nap while your Audi gives you a ride home.

Here’s why radar matters. In contrast to cameras and lidar, 4D imaging radar works in all conditions, offering “the highest reliability in various weather and environment conditions, including fog, heavy rain, pitch darkness, and air pollution.” It also has the benefits of sensing out to 300 meters, a requirement for higher levels of automation, and of capturing Doppler shifts, which show whether an object is moving toward the vehicle or away from it.

If Marenko’s claims are true, the 4D imaging radar can tell when a car is moving at the same speed as you, nearly 1,000 feet in the distance, in the white-out conditions of a full snowstorm. It can also sense when that car slows down, or stops abruptly, and act accordingly.
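To make the Doppler idea concrete, here is a rough sketch of how a shift in the returned frequency maps to relative speed. Everything named here is an assumption for illustration: the 77 GHz carrier is typical of the automotive radar band but is not a figure from Marenko's article, and the function names, sign convention (positive shift means the gap is closing), and tolerance are hypothetical. It uses the standard two-way approximation, valid for speeds far below the speed of light.

```python
# Illustrative sketch of Doppler-based relative speed (names are hypothetical).
C = 299_792_458.0   # speed of light in m/s
CARRIER_HZ = 77e9   # assumed carrier frequency, not from the article


def shift_from_speed(closing_mps: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Two-way Doppler shift for a target closing at closing_mps (negative = opening)."""
    return 2.0 * closing_mps * carrier_hz / C


def speed_from_shift(shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Relative (radial) speed implied by a measured Doppler shift."""
    return shift_hz * C / (2.0 * carrier_hz)


def describe(shift_hz: float, tolerance_hz: float = 1.0) -> str:
    """Classify a target by the sign of its Doppler shift."""
    if abs(shift_hz) <= tolerance_hz:
        return "holding distance"  # same speed as us, as in the snowstorm example
    return "closing" if shift_hz > 0 else "opening"


if __name__ == "__main__":
    for closing in (0.0, 10.0, -10.0):  # m/s, assumed example values
        shift = shift_from_speed(closing)
        print(f"{closing:+.1f} m/s -> {shift:+.0f} Hz -> {describe(shift)}")
```

A car matching your speed produces essentially no shift, while one that brakes hard ahead of you shows up as a sudden positive shift, which is the cue the vehicle would act on.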

He also argues that 4D imaging radar puts “a special focus” on object separation by elevation, which can help the car decide whether a stationary object up ahead is a tree branch or a person.

Sensor packages

Note that Marenko doesn’t argue that 4D imaging radar can handle the task of autonomy on its own. He sees it working only as part of a sensor package that includes optical sensors. This is the autonomous car version of the shopworn refrain in 3D capture that “each technology is another tool in the tool chest,” but the concept still holds true.

“The 4D imaging radar’s ability to detect at the longest range of all sensors,” he writes, “gives it the highest likelihood to be the first to identify danger. It can then direct the camera and lidar sensors to areas of interest, which will considerabl[y] increase safety performance.” At this distance, and for this purpose, the accuracy of the sensor can be relatively low.

His most convincing argument comes last: cost. The whole autonomous sensor package will need to cost less than $1,000 to be commercially viable. Using a 4D imaging radar in this way, he argues, can help us achieve level 3 autonomy “and higher without the need for more than [one] lidar unit per vehicle for redundancy.”

Scandalously, he says 4D imaging radar might free us of the need for lidar entirely. Whether that proves to be true, only time will tell.