Smartphones, though they might seem like toys compared to more precise engineering-grade equipment, have played a pivotal role in the 3D technology marketplace. For instance, the tiny devices have helped to invigorate photogrammetry, with software like Autodesk’s 123D Catch and Acute 3D’s new Smart3DCapture Free Edition making 3D capture available to anyone who can snap a few pictures with their iPhone.

But using the pocket-sized device you already own for 3D capture without photogrammetry has long seemed impossible. Recent developments, like tablet solutions from DotProduct, Paracosm, Mantis Vision, and Google’s hotly anticipated Project Tango, have brought us closer. Each of these, however, still requires buying a whole new gadget, and some are well beyond the budget of the average consumer or prosumer. Now some exciting new research from Microsoft shows that turning your existing smartphone camera into a 3D camera is possible, and far easier and cheaper than you might think.

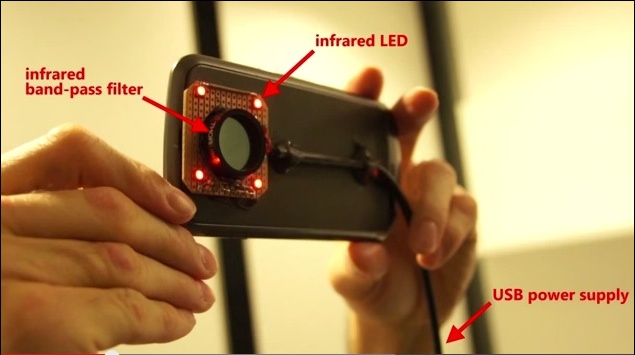

According to the MIT Technology Review, a team of Microsoft researchers accomplished this feat simply by modifying the existing cameras on several Samsung Galaxy Nexus phones. First, they removed the near-infrared filter, which is “often used in everyday cameras to block normal unwanted light signals in pictures.” In this case, infrared light is exactly what they wanted the cameras to pick up, so they then added “a filter that only allowed infrared light through, along with a ring of several cheap near-infrared LEDs. By doing so, they essentially made each camera act as an infrared camera.”

The researchers are a little poetic (probably unintentionally) when they describe how their setup gathers 3D data. The team says “it wanted to use the reflective intensity of infrared light as something like a cross between a sonar signal and a torch in a dark room. The light would bounce off the nearby object and return to the sensor with a corresponding intensity. Objects are bright when they’re close and dim when they’re far away.”
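To make the “bright when close, dim when far” idea concrete, here is a minimal sketch that assumes an idealized inverse-square falloff: received intensity is `k * albedo / depth**2`, where `k` is a calibration constant and `albedo` is the surface’s reflectivity, both assumed known and constant. The function names and the model itself are illustrative assumptions, not the researchers’ actual method. Inverting the formula turns a brightness reading back into a distance.

```python
import math


def intensity_from_depth(depth_m: float, k: float = 1.0, albedo: float = 1.0) -> float:
    """Idealized reflected IR intensity (assumed inverse-square falloff)."""
    return k * albedo / depth_m ** 2


def depth_from_intensity(intensity: float, k: float = 1.0, albedo: float = 1.0) -> float:
    """Invert the assumed inverse-square model to estimate distance in metres."""
    if intensity <= 0:
        raise ValueError("intensity must be positive")
    return math.sqrt(k * albedo / intensity)


if __name__ == "__main__":
    for d in (0.2, 0.4, 0.8):
        i = intensity_from_depth(d)
        print(f"depth {d:.1f} m -> intensity {i:.2f} -> recovered {depth_from_intensity(i):.2f} m")
```

Under this model, doubling the distance cuts the returned intensity to a quarter. The catch is that a darker surface at close range can look just like a brighter one farther away, since `albedo` and `depth` are entangled. That ambiguity is one reason a purely formula-based approach is fragile in practice.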

Altered smartphone camera captures 3D model of human hand. Source: MIT Technology Review

It doesn’t have the accuracy of a LiDAR rig, obviously, but as the abstract for the paper explains, it obtains results comparable to “high-quality consumer depth cameras.”

According to Microsoft’s team, LiDAR-level accuracy isn’t really the point anyway. “The idea,” the article explains, “is to make access to developing 3D applications easier by lowering the costs and technical barriers to entry for such devices, and to make the 3D depth cameras themselves much smaller and less power-hungry.”

It’s hard to overstate how revolutionary these incremental decreases in cost and size can be. Just remember how many more photographs you’ve seen and taken since you bought your first smartphone.

Enticingly, this project also teases a parallel advance in machine learning: the team built up a set of training data that allowed them to measure human hands and faces according to the properties of the skin’s reflection. “The huge amount of training data allows the machine to build enough associations with the data points in the pictures that it can then use additional properties of the image to estimate the depth.” The solution, they say, was developed for hands and faces but could, in theory, work for any object.
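The gist of “learning” depth from training data can be illustrated with a deliberately tiny stand-in. The sketch below does not reproduce the researchers’ learner. It fits an ordinary least-squares line on a single hand-picked feature (`1 / sqrt(intensity)`) using synthetic, labelled (intensity, depth) pairs generated under an assumed inverse-square model with an assumed skin-like albedo of 0.6 and 2% sensor noise. All function names and parameter values are hypothetical.

```python
import math
import random


def make_training_data(n: int, k: float = 1.0, albedo: float = 0.6, seed: int = 0):
    """Hypothetical labelled pairs (IR intensity, true depth in metres).

    Synthetic stand-in for real captures paired with ground-truth depth.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        depth = rng.uniform(0.2, 1.0)
        intensity = k * albedo / depth ** 2        # assumed inverse-square falloff
        intensity *= 1.0 + rng.gauss(0.0, 0.02)    # assumed 2% multiplicative sensor noise
        data.append((intensity, depth))
    return data


def fit_depth_model(data):
    """Least-squares fit of depth = a * x + b, with feature x = 1 / sqrt(intensity)."""
    xs = [1.0 / math.sqrt(i) for i, _ in data]
    ys = [d for _, d in data]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


def predict_depth(model, intensity: float) -> float:
    """Apply the fitted line to a new intensity reading."""
    a, b = model
    return a * (1.0 / math.sqrt(intensity)) + b


if __name__ == "__main__":
    model = fit_depth_model(make_training_data(500))
    # Under the assumed model, a surface at 0.5 m returns intensity 0.6 / 0.25 = 2.4.
    print(f"slope={model[0]:.3f}, intercept={model[1]:.3f}")
    print(f"predicted depth at intensity 2.4: {predict_depth(model, 2.4):.3f} m")
```

The fitted slope absorbs the unknown calibration constant and reflectivity, so nothing about the skin has to be measured by hand. In this toy model, the same property means a model trained on skin would need new training data before it could handle a very differently reflecting material. A real system would draw on many more image features than one brightness value.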

The project hints at a near future in which you could buy a small, cheap add-on that turns your smartphone into a 3D sensor, and then teach your phone to recognize important information about the objects you’re scanning. Imagine that: actionable data from the same device you use to play Angry Birds.
