Two of the biggest forces for technological innovation in the 3D tech space are smartphones and autonomous vehicles. Apple has significant financial interest in both, so it shouldn’t come as a surprise that the company has its hands deep in some feature-recognition research that could end up being a pretty big deal.

In a paper released last month on arXiv, Apple engineers Yin Zhou and Oncel Tuzel detailed an end-to-end deep neural network solution for accurate feature recognition in point clouds.

But first, here’s the problem they were trying to solve: Though accurate detection of 3D objects in point clouds is necessary for applications like autonomous navigation, robotics, and AR/VR, most of our current solutions fall short.

One way we currently perform feature recognition is to project the point cloud into a perspective view and apply image-based feature recognition techniques. Another approach is to rasterize the point clouds into a 3D voxel grid, and encode the features by hand (think of a voxel as a pixel in three dimensions instead of two). The first approach loses us some valuable depth information, and both create an extra step. According to the researchers, this “informational bottleneck” keeps us from “effectively exploiting 3D shape information and the required invariances for the detection task.”

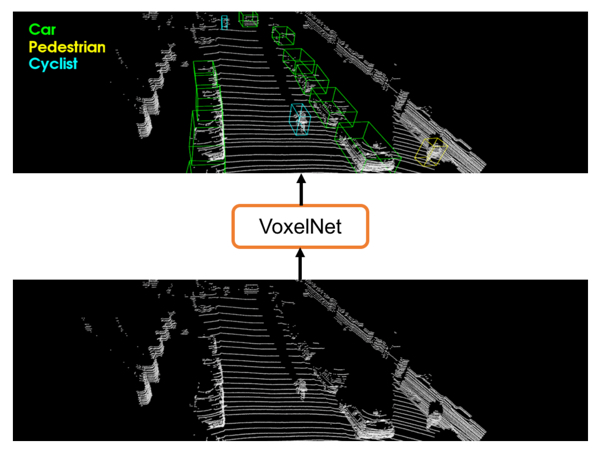

Their solution is to scale up a deep neural network with a /definitely/ not-creepy name, VoxelNet. VoxelNet is able to process sparse point clouds and recognize features without requiring manual input. (But how long before VoxelNet becomes sentient?)

Their solution is to scale up a deep neural network with a /definitely/ not-creepy name, VoxelNet. VoxelNet is able to process sparse point clouds and recognize features without requiring manual input. (But how long before VoxelNet becomes sentient?)

The network’s big trick is simultaneously learning how to discriminate features in a point cloud and predicting how to bound that feature in a 3D box. This allows the network to divide the point cloud into voxels, encode the voxels using “stacked VFE layers,” and then generate a volumetric representation.

TL;DR: The deep learning network learns how to break features down into voxels, and then relates the right ones together to create 3D representations of each feature.

Too short, I need more detail and I love complex math: Read the paper here.

According to the researchers, the results are promising. VoxelNet “outperforms state-of-the-art lidar based 3D detection methods by a large margin. On more challenging tasks, such as 3D detection of pedestrians and cyclists, VoxelNet also demonstrates encouraging results showing that it provides a better 3D representation.”

If the network can recognize cyclists in a sparse point cloud captured by a moving car, couldn’t it recognize features in a point cloud gathered by a cell phone, a handheld scanner, or a terrestrial scanner? There would certainly be a lot of uses for that.