Many things that are easy for us to do are devilishly difficult to teach a computer. You have no trouble identifying pictures of cats, for instance, yet it has taken decades of work to develop an artificial intelligence that can do the same. That is why you might be surprised to learn that researchers have developed a method that could teach AI to extrapolate 3D objects from 2D pictures.

As described in Science, the researchers first taught an algorithm to translate 3D objects into 2D maps:

“Imagine, for example, hollowing out a mountain globe and flattening it into a rectangular map, with each point on the surface displaying latitude, longitude and altitude.”

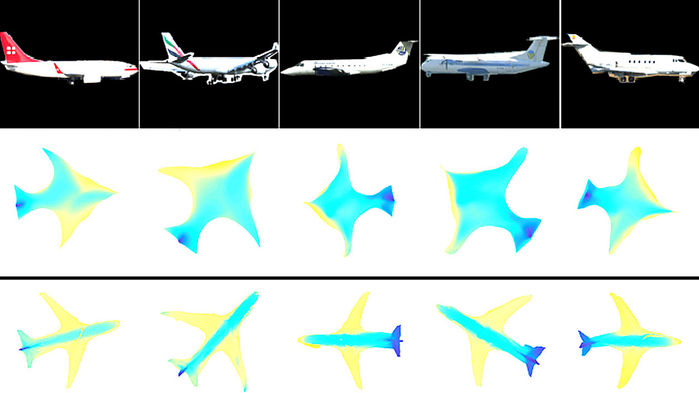
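To make the globe analogy concrete, here is a toy sketch of the flattening idea. It is not SurfNet's code, and the article does not describe the researchers' actual representation. It assumes a hypothetical bumpy globe whose terrain is given by an illustrative `altitude` function, and it samples that globe onto a rectangular grid in which each cell stores latitude, longitude and altitude, just as the quote describes. The names `altitude` and `flatten_globe` are made up for this illustration.

```python
import math

def altitude(lat, lon):
    """Hypothetical terrain (illustrative only): ridges that fade toward the poles."""
    return 0.1 * math.cos(lat) * math.cos(3 * lon)

def flatten_globe(n_lat, n_lon, height_fn):
    """Sample a bumpy globe onto an n_lat x n_lon rectangular map.

    Rows step through latitude and columns through longitude; each cell
    stores (latitude, longitude, altitude), mirroring the map analogy.
    """
    grid = []
    for i in range(n_lat):
        # Latitude runs from -pi/2 (south pole) to +pi/2 (north pole).
        lat = -math.pi / 2 + math.pi * i / (n_lat - 1)
        row = []
        for j in range(n_lon):
            # Longitude runs from -pi up to, but not including, +pi,
            # because the map wraps around the globe.
            lon = -math.pi + 2 * math.pi * j / n_lon
            row.append((lat, lon, height_fn(lat, lon)))
        grid.append(row)
    return grid

flat = flatten_globe(5, 8, altitude)
print(len(flat), len(flat[0]))  # prints: 5 8
```

The hollow globe becomes an ordinary 2D array, which is the kind of input that image-processing networks already handle well.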

After the algorithm got the hang of that step, the researchers taught it to work in the other direction: producing flattened representations that could be stitched back together into 3D forms.
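The reverse direction can be sketched the same way, again as a hypothetical toy rather than the researchers' method. Assuming a map whose cells hold (latitude, longitude, altitude), each cell is placed back on a unit globe with its radius raised by its altitude. The "stitching" is shown with a common mesh-building convention that is our assumption, not a detail from the article: neighbouring cells are joined into four-sided faces, with the last longitude column wrapping around to the first. The names `to_xyz`, `reconstruct` and `stitch_faces` are illustrative.

```python
import math

def to_xyz(lat, lon, alt, base_radius=1.0):
    """Place one map cell back on the globe; higher altitude means a larger radius."""
    r = base_radius + alt
    return (
        r * math.cos(lat) * math.cos(lon),
        r * math.cos(lat) * math.sin(lon),
        r * math.sin(lat),
    )

def reconstruct(grid, base_radius=1.0):
    """Turn a rectangular map of (lat, lon, alt) cells into rows of 3D points."""
    return [[to_xyz(lat, lon, alt, base_radius) for (lat, lon, alt) in row]
            for row in grid]

def stitch_faces(n_rows, n_cols):
    """Join neighbouring cells into quads, indexing points row by row.

    Longitude wraps around, so the last column connects back to the first.
    """
    faces = []
    for i in range(n_rows - 1):
        for j in range(n_cols):
            j_next = (j + 1) % n_cols
            faces.append((i * n_cols + j, i * n_cols + j_next,
                          (i + 1) * n_cols + j_next, (i + 1) * n_cols + j))
    return faces

# A tiny two-cell map on the equator: one cell at sea level, one raised by 0.5.
points = reconstruct([[(0.0, 0.0, 0.0), (0.0, math.pi / 2, 0.5)]])
mesh = stitch_faces(3, 4)
print(len(mesh))  # prints: 8
```

Because neighbouring cells on the map are also neighbours on the surface, the 2D layout alone tells you how to connect the points into a closed shape.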

The program, called SurfNet, can already flatten and then reconstruct cars, planes, and human hands, which makes it a notable advance in how computers process 3D data. But the most important part may be yet to come: the program could one day take the extra step of generating 3D renderings of objects from their 2D representations in photos.

SurfNet, in other words, could become an efficient way to generate 3D content, which would make it one of our best hopes for feeding the augmented and virtual reality headsets of the future.