Lately, we’ve seen a wave of new software applications, both experimental and commercial, that do amazing things with 3D data. Soon, we’ll be able to use these applications for everything from processing as-built 3D scans into a full BIM model without human intervention, to crowd-sourcing 3D data captured by autonomous cars and using it to detect changes in urban environments. We’re at a true turning point in the development of this industry.

There’s just one thing preventing us from getting there, says Dr. Robert Radovanovic of SarPoint Engineering. Current software applications all extract features and detect change differently, which makes it difficult for them to communicate real information to each other. In other words, these applications aren’t interoperable, which limits our ability to develop the workflows, processes, and 3D services of the future.

Dr. Radovanovic participates in OpenLSEF, a Solv3D initiative and working group that is developing standards for the extraction of 3D features. When I caught up with him, he explained how this standard is key if we want to bring the 3D tech industry to scale, and how we can use it to exploit the true potential of 3D data for service providers, end users, and even the general public.

Sean Higgins: Why do we need something like OpenLSEF to bring 3D technology to the next level?

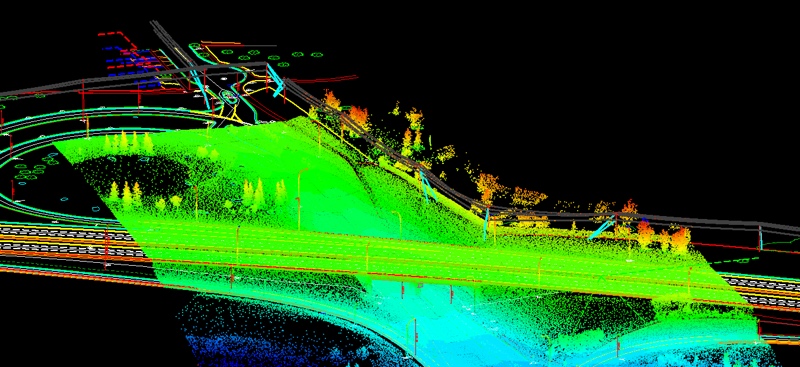

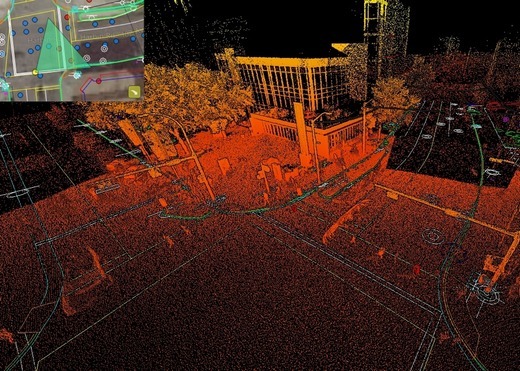

Dr. Robert Radovanovic: When I got into mobile scanning back in 2012, the big roadblock to creating any sort of usable product was a lack of efficient extraction tools. You could scan and make these big point clouds, but you couldn’t create information from them. Flash forward five years to 2017 and the tools are better. There are a lot of ways to extract features from point clouds, and the new roadblock is getting any kind of consensus on what we’re actually supposed to pull out of this giant 3D cloud.

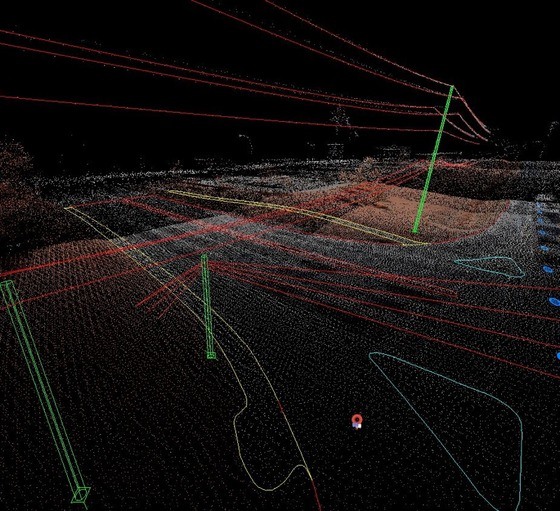

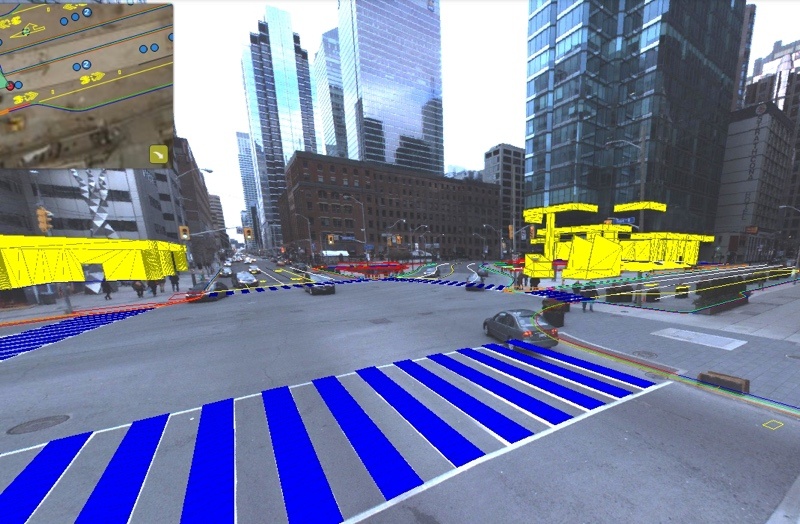

Do you define a stop sign at its face center? Or is it at the bottom of the post that’s holding it up? That sounds pretty pedantic, but if you’re a car and you’re cruising down the road you need that information to navigate. Or if I give a CAD file to a power line engineer and say, hey, I’ve extracted all the power poles, will they expect that the power poles are 3D models in that drawing? Or just vertical representations of where the poles are?

We really need semantic standards because a lack of common comprehension is stopping us from scaling the industry right now. So that’s what OpenLSEF is all about. It’s a dictionary that aggregates all the most commonly extracted features and gives them definitions.

What do you mean when you say the industry can’t scale right now?

Everybody wants laser scanning to be disruptive. People can feel that laser scanning is a disruptive technology. The problem is that people want to build extraction tools but they don’t know what they’re supposed to extract. Or they want to set up extraction companies full of people that pull data from point clouds, but they don’t know what they’re supposed to extract. Or they want to build artificial intelligence to use and interpret this data, but they don’t know what to train the artificial intelligences on.

OpenLSEF draws together all these different disruptive industries and provides the playing field for them to have a common set of rules, so that they can shine and excel.

Would the OpenLSEF standard also help new companies entering the 3D capture and data processing space?

Absolutely. As a grizzled veteran of the mobile scanning world, it’s interesting to see new people get involved, particularly with UAVs. They are really, really psyched about the technology, then they hit a wall when they realize their business cannot survive on just generating point clouds because there is no end user for these point clouds.

So OpenLSEF can even give a roadmap: You got a drone, you need to be able to create these extractables so someone will buy your product. Then all types of things can happen. Data libraries can happen, you can have people aggregating scan data. As long as you know there’s a consistent product, you can sell it.

It sounds like this standard could do more than give providers a common language. If I understand it correctly, it could also give emerging technologies a common language to communicate with each other. For instance, it sounds possible we could do things like urban change detection based on data gathered from, say, autonomous vehicles…

Exactly. In an ideal world, you could have a laser scanning provider create a high definition map of an urban landscape. From there, an automated feature extraction tool kit would pull the features of the raw scan to create the high-definition map compliant with the OpenLSEF standard. Later, a car traveling down a road would be using lidar to scan its environment and extract OpenLSEF-compliant features, which it would compare to the features from the original model.

Again, the standard acts as a common platform for all the technologies to share meaning between each other.
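The comparison step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Feature` record, the feature codes (`"stop_sign"`, `"power_pole"`), the 0.5 m tolerance, and the greedy nearest-match rule are all hypothetical and are not taken from OpenLSEF. The point is simply that once both sides agree on what a feature is and where its reference point sits, change detection reduces to matching two lists.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Feature:
    kind: str        # hypothetical feature code, not an OpenLSEF identifier
    position: tuple  # (x, y, z) in metres at the agreed reference point


def detect_changes(reference, observed, tolerance=0.5):
    """Return (missing, added) between a reference map and a new observation.

    A reference feature counts as still present if an unmatched observed
    feature of the same kind lies within `tolerance` metres of it.
    """
    unmatched = list(observed)
    missing = []
    for ref in reference:
        candidates = [
            obs for obs in unmatched
            if obs.kind == ref.kind and dist(obs.position, ref.position) <= tolerance
        ]
        if candidates:
            # Greedy choice: pair with the closest candidate and consume it.
            closest = min(candidates, key=lambda obs: dist(obs.position, ref.position))
            unmatched.remove(closest)
        else:
            missing.append(ref)
    return missing, unmatched


# Reference high-definition map versus what the vehicle extracted on a later pass.
reference = [
    Feature("stop_sign", (10.0, 5.0, 0.0)),
    Feature("power_pole", (20.0, 5.0, 0.0)),
]
observed = [
    Feature("stop_sign", (10.1, 5.05, 0.0)),  # same sign, small measurement noise
    Feature("power_pole", (40.0, 5.0, 0.0)),  # a pole where none was mapped
]

missing, added = detect_changes(reference, observed)
```

Here `missing` holds the pole that was mapped at x = 20 but not seen again, and `added` holds the pole observed at x = 40. If the two parties defined the stop sign at different reference points (face center versus base of the post), the same physical sign could fall outside the tolerance and be reported as a change, which is the kind of ambiguity a shared definition removes.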

What other sort of “ideal world” applications do you imagine that the standard will enable?

A big one for us is engineering design. In fields like BIM and architectural modeling, the big limiter to the adoption of scanning is the cost associated with extraction of CAD features. As long as people need CAD features to design in, someone is going to have to extract them.

As long as there is no common grammar, no businesses can draw upon efficiencies of scale, because you can’t just repeat the work. I could not become the world’s greatest curb extraction house if every single job requires me to extract curbs differently.

I see this being a real enabler for service providers, because now we can do cool things like collecting data on spec, and making data libraries where anybody can go and know that what they’re downloading will be to a common standard. That way they can expect what they’re getting. The ASPRS LAS classification definitions, for example, were massive for the growth of the aerial lidar industry.

It sounds like this standard will have huge benefits for end users.

As a data collector and extractor, I can tell you, there’s a wide variety of expectations from the end user. People have these awesome, oversold views of what they’re going to do with the 3D data.

It’s like the seven stages of grief in reverse. The first stage is, We’re going to get this 3D point cloud, and it’s going to be amazing because it has everything in it. The second stage is, We can’t do anything with just raw 3D point clouds beyond visualization. Next, they say OK, we’ll get a CAD file, but what do we actually want in that CAD file? And then the disappointment comes, because the extraction did not contain what they actually wanted because they did not have a standard to go to.

This standard will help these end users better understand what they need, and it seems like it could even help them to get what they need without knowing it ahead of time.

That’s why part of the working group has a strong focus on the end users, the engineers and the designers who will be using the standard. We don’t want the standard to be created purely from the data collector and information extractor viewpoint. We need end-user input to make sure that the standard is viable for the needs of the AEC community, the BIM community, the power-line community, and the automotive industry.

Do you think this is the first time in the history of 3D-scanning technology that this sort of standard has been necessary, or viable?

I think so. I think the fact that we’re talking about OpenLSEF demonstrates that the laser scanning industry is moving fundamentally from a hobbyist industry to a serious industry. It’s one thing if your industry is comprised of experts who know the ins and outs of their hardware and create boutique pieces of software to generate bespoke product. But now the lowering cost of UAV providers, for example, is causing an exponential rise in the amount of scan data available. That means now the industry is moving to maturity, where we suddenly need a common lingua franca, so that everybody can start sharing this information that they’re producing.

Until that happens, the industry is an endless assortment of tailors that can charge a lot for products but have no ability to scale.

How the Standard is Defined

Let’s talk about the standard itself. How do you decide what the most commonly extracted features are? How do you decide what needs to be in this so-called dictionary?

It’s early days for OpenLSEF, and right now we are building a working group. There’s a registration for the working group to pull together data collectors, extraction providers, designers, and any other end users to sit down and flesh out the standards. So the version two that’s up currently is provisional, and the hope is to put together 25 or 30 folks and start coming up with what’s going to be on that list and assigning definitions to the items on that list.

What about the objects that are less common, say, the kind of objects that an architect might want to extract but a civil engineer wouldn’t care about?

The structure of OpenLSEF will be primary definitions and then extensions, depending on the industry. For example, certain features are common to all industries. So curbs, DEM definitions, you can put those in the primary standard, which can be generalized.

Then there will be extensions, for example, for architecture. We’ll ask, how do balconies get represented? Right now, we’re working on a major light rail transit project. And one of the big issues that we’re facing is how you define the edge of a building when you’re in an urban area. These buildings have overhangs and crenellations, so to what detail do we actually need to do this extraction? Left to their own devices, designers will go to the infinity scale. Suddenly costs explode and nobody wants to do scanning because it appears to be too expensive. But if I were a surveyor, I would never survey to the level of detail that these designers attempt to extract. So we need base standards.

We can also have a level 2 definition for a building, similar to what’s in the BIM world. Then you could say, these were extracted to the first level of detail, or the second level of detail.

We had talked before about a lot of new people entering the industry, so I wonder: do the standards also account for accuracy?

OpenLSEF is starting out as a grammar for the extraction of features. However, we see two other critical points.

First, we are standardizing the grammar of QA/QC description, because mobile scanning is significantly different from all other forms of surveying that came before it. So we’re working on asking: how do I share point clouds and easily describe to you how accurate this point cloud is? We need some normalized metrics that anybody can look at and say, OK, I can compare these two scan quotes and comprehend what the differences between them are from a quality and consistency perspective. That doesn’t exist.

The second issue that we’re expanding into is the sharing of digital elevation models. One of the first things that has occurred as people started to create digital elevation models from scan data is that the models are huge, so we try to thin them. Well, what does that mean? What’s the appropriate way to actually thin these point clouds to create the surfaces that people need? So we’re working on definitions and meaning so I can simply say, “Oh, well, this ground surface was thinned in accordance with OpenLSEF,” and you’ll know immediately what assumptions I’ve made. Are there break lines? Are there not break lines? How have the features been preserved?
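To make the thinning question concrete, here is a minimal Python sketch of one possible rule: keep only the lowest point in each square grid cell, and return a small record of the assumptions alongside the result. The rule, the function name `thin_ground_points`, and the metadata keys are illustrative assumptions, not definitions from OpenLSEF; a real standard might specify a different method entirely, including rules for preserving break lines.

```python
from math import floor


def thin_ground_points(points, cell_size):
    """Thin (x, y, z) points by keeping the lowest point per grid cell.

    Returns the thinned points plus a metadata record stating the
    assumptions made, so a recipient can tell how the surface was produced.
    """
    lowest = {}
    for x, y, z in points:
        cell = (floor(x / cell_size), floor(y / cell_size))
        if cell not in lowest or z < lowest[cell][2]:
            lowest[cell] = (x, y, z)

    thinned = sorted(lowest.values())
    metadata = {
        "method": "lowest point per grid cell",  # hypothetical rule name
        "cell_size_m": cell_size,
        "breaklines_preserved": False,  # this simple rule ignores break lines
        "input_points": len(points),
        "output_points": len(thinned),
    }
    return thinned, metadata


# Four sample points falling into two 1 m cells.
points = [
    (0.2, 0.3, 10.0),
    (0.7, 0.6, 9.8),
    (1.4, 0.2, 10.5),
    (1.9, 0.9, 10.4),
]
thinned, metadata = thin_ground_points(points, cell_size=1.0)
```

With these sample points, two of the four survive, one per cell. The metadata record is the part that matters for sharing: it answers the questions raised above (which rule, what spacing, whether break lines were kept) without the recipient having to ask.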

For more information about the OpenLSEF working group, or to join, see the website.